AI Image SCANDAL: Trump & Epstein Together?

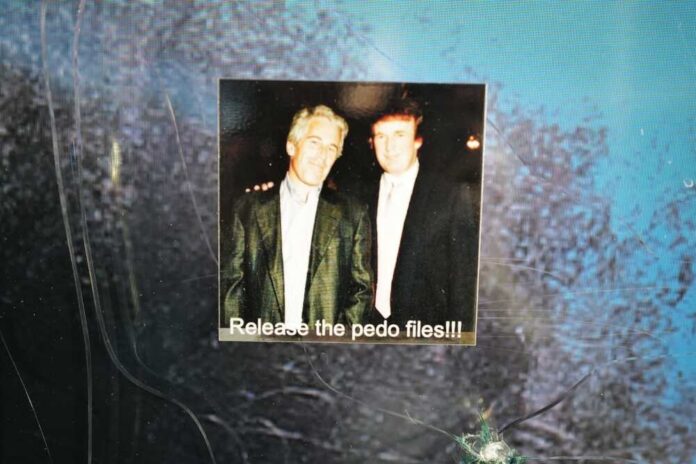

Major AI image generators are producing convincing fake images of Jeffrey Epstein with world leaders in seconds, exposing a dangerous vulnerability that threatens to weaponize technology against innocent Americans and undermine truth itself.

Story Snapshot

- NewsGuard study reveals AI tools rapidly fabricate false Epstein images with prominent politicians including President Trump

- xAI’s Grok Imagine produced fake images with all tested politicians, while OpenAI blocked all requests and Google showed inconsistent guardrails

- Fabricated images already accumulating millions of views on social media, spreading baseless accusations against public figures

- Study exposes critical gaps in AI safety protocols as Justice Department releases over three million Epstein investigation documents

AI Tools Create Instant Disinformation Weapon

NewsGuard, a US-based disinformation watchdog, released a study on February 6, 2026, demonstrating how leading AI image generation tools can fabricate convincing fake photographs of Jeffrey Epstein with world leaders and politicians. The research tested three major platforms (xAI’s Grok Imagine, Google’s Gemini, and OpenAI’s ChatGPT) by requesting images of Epstein with five politicians: President Trump, Israeli Prime Minister Benjamin Netanyahu, French President Emmanuel Macron, Ukrainian President Volodymyr Zelenskyy, and British Prime Minister Keir Starmer. The results exposed alarming inconsistencies in safety protocols across the industry, with some platforms producing realistic fabrications within seconds.

AI tools fabricate Epstein images 'in seconds,' study says https://t.co/bGojtIzCCB

— Economic Times (@EconomicTimes) February 6, 2026

Stark Differences in Platform Safety Standards

Grok Imagine produced convincing fakes with all five politicians tested, including a fabricated image showing a younger Trump and Epstein surrounded by young girls. While Trump has been photographed with Epstein at social events in the past, no publicly known image exists of them with underage girls, making this AI-generated content particularly dangerous for spreading false narratives. Google’s Gemini declined to generate an image of Epstein with Trump but produced realistic photos of Epstein with Netanyahu, Macron, Zelenskyy, and Starmer in various fabricated scenarios including parties, private jets, and beaches.

OpenAI’s ChatGPT demonstrated the strongest safety protocols, declining to produce any images showing Epstein with the politicians. The platform cited its policy against creating images involving real people with sexualized depictions of minors or scenarios implying sexual abuse. This responsible approach stands in stark contrast to platforms that prioritized speed and capability over protecting innocent individuals from false associations. The disparities reveal a troubling lack of industry-wide standards at a moment when such technology could be weaponized for political attacks or character assassination.

Real-World Damage Already Spreading Rapidly

Fabricated images are not merely a theoretical risk; they are already causing real harm. AI-generated photos of Epstein with New York Mayor Zohran Mamdani and filmmaker Mira Nair accumulated millions of views on X. Researchers detected Google’s SynthID watermark in the images, indicating that they were AI-generated. A fabricated social media post attributed to Trump also circulated, in which he appeared to pledge to drop tariffs against Canada if Prime Minister Mark Carney admitted involvement with Epstein. AFP’s fact-checkers found no evidence of Carney’s involvement in Epstein’s alleged crimes, yet the fabricated content spread widely before it could be verified.

The timing of this disinformation surge is no coincidence. Recent weeks saw the Justice Department release over three million documents, photos, and videos related to its investigation into Epstein, who died in custody in 2019. This document release reignited public interest in the case and created fertile ground for disinformation actors to exploit attention by generating and spreading false imagery. NewsGuard concluded the findings demonstrate the ease with which bad actors can use AI imaging tools to generate realistic-seeming viral fakes, making it increasingly difficult to distinguish authentic images from AI-generated fabrications.

Threats to Truth and American Values

The ease of fabricating damaging false images represents a direct assault on foundational principles of truth and justice that underpin American society. When any individual can be falsely linked to heinous crimes through AI-generated imagery within seconds, the presumption of innocence becomes meaningless and reputations can be destroyed before facts emerge. This technology threatens not just politicians but everyday Americans who could become targets of malicious actors seeking revenge, political advantage, or simple chaos. The inconsistent safety standards across AI platforms suggest profit motives are outweighing responsibility to prevent abuse.

Research published in PNAS Nexus indicates that labeling AI-generated media can meaningfully reduce belief in misleading content. Labels explicitly identifying images as AI-generated significantly reduced respondents’ belief in the content’s core claims and their likelihood of sharing it, and labels that specifically disclosed AI generation were more effective than generic “edited” or “digitally altered” labels. However, Google’s SynthID watermark is invisible, so it offers little protection for average users, who cannot detect it without specialized tools. Without visible, mandatory labeling requirements and consistent industry standards, Americans remain vulnerable to weaponized disinformation.

Sources:

AI tools fabricate Epstein images ‘in seconds,’ study says – Channel News Asia

Labeling AI-generated media can meaningfully reduce belief in misleading content – PNAS Nexus

AI tools fabricate Epstein images in seconds, study says – NBC Right Now